Can LLMs Improve Like AlphaZero?

The last post discussed different types of chess AIs, such as AlphaZero, which played chess against itself and mastered the game beyond any human or AI before it, and Maia, which learned only from human games and tried to match human moves. For chess, each approach has its purpose. Large language models (LLMs) seem limited to the “Maia-style approach”: they learn from human text without identifying which of it is good quality and without being able to surpass the humans they learn from. Can LLMs do better?

Human Feedback

ChatGPT was trained with Reinforcement Learning from Human Feedback (RLHF), where OpenAI hired people to rate its output so it could learn how to improve. Such models can also learn from the usage of millions of users, both when users directly rate output (with a thumbs up or down) and from the “implicit rating” in how they use it. Human feedback helps, but it doesn’t scale as well as automatic methods.

Automatically Determining Quality

During the initial training, I think LLMs like ChatGPT treat all text basically the same, without giving more weight to higher-quality text. However, the AI companies will likely change this as they look for ways to improve their models besides just using more data and layers. They could use a variety of signals so that the LLM learns more from high-quality text, such as assigning more weight to text based on:

Upvotes the content received on Reddit and similar sites

How the website with the content ranks online (similar to Google’s PageRank)

Sentiment analysis of the content - e.g. do people say positive things about it

How well-written the content is - GPT-4 could be used to filter out poorly written content so future or domain-specific LLMs only train on well-written content

How accurate the website is - GPT-4 can’t be trusted here for specific examples, but it could likely recognize if entire websites are unreliable.
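To make the weighting idea concrete, here is a minimal sketch of how such signals could be folded into a single per-document weight and then used in a weighted training loss. Everything in it is an assumption for illustration: the function names, the three signals chosen, and the formula that combines them are hypothetical, not how any AI company actually does it.

```python
import math


def quality_weight(upvotes, site_rank_score, well_written_score):
    """Combine hypothetical quality signals into one positive training weight.

    All inputs and the formula are illustrative assumptions:
    - upvotes: raw vote count (>= 0)
    - site_rank_score: PageRank-like score in [0, 1]
    - well_written_score: writing-quality score in [0, 1], e.g. from a grader model
    """
    vote_signal = math.log1p(max(upvotes, 0))  # dampen very large vote counts
    return (1.0 + vote_signal) * (0.5 + site_rank_score) * (0.5 + well_written_score)


def weighted_mean_loss(losses, weights):
    """Average per-document losses so higher-weight documents count for more."""
    total_weight = sum(weights)
    return sum(loss * w for loss, w in zip(losses, weights)) / total_weight


# Two made-up documents: a low-quality one and a high-quality one.
docs = [
    {"upvotes": 0, "site_rank_score": 0.1, "well_written_score": 0.2, "loss": 2.0},
    {"upvotes": 500, "site_rank_score": 0.9, "well_written_score": 0.9, "loss": 1.0},
]
weights = [
    quality_weight(d["upvotes"], d["site_rank_score"], d["well_written_score"])
    for d in docs
]
losses = [d["loss"] for d in docs]
print(weighted_mean_loss(losses, weights))
```

With these made-up numbers the high-quality document dominates the average, so the model's updates would be driven mostly by how well it predicts that document.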

(There’s also the approach of Anthropic where an AI trains itself based on a constitution, see AstralCodexTen.)

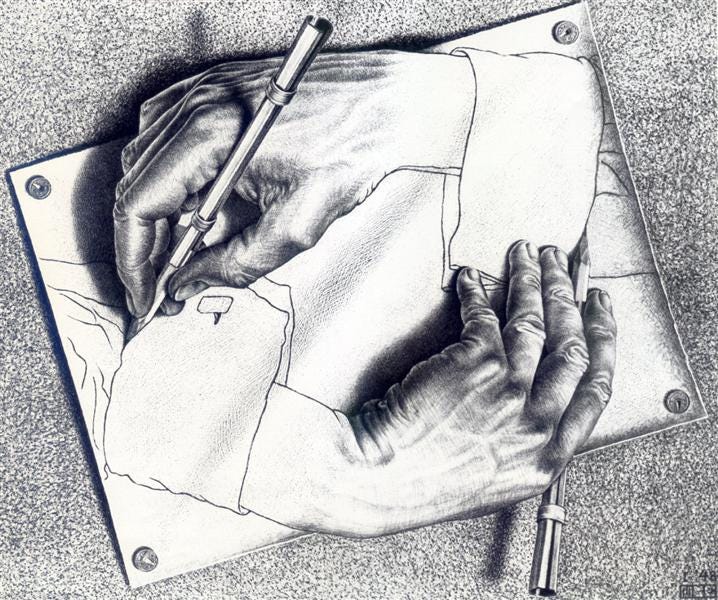

Surpassing Humans

The above examples just help filter through human-created content, but can LLMs surpass the output of the best humans? For text in general this doesn’t seem possible under the current paradigm, since all an LLM does is learn from human examples. But it could be possible in specific domains where the LLM is able to get additional feedback:

Programming - if one system generates code and another system generates tests, the system could learn over time what kind of code passes tests

Math - the LLM could learn what kind of syntax is valid for the Wolfram math plugin so that over time it can learn to avoid syntax errors. It’s more difficult to evaluate whether the LLM made other kinds of mistakes besides syntax errors, but maybe it can “plug in” an example value to see if it works, just like humans do.

Other services - just like with the math plugin, over time LLMs can learn how to better use other plugins and how to better search the web and other content for information.
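The programming case is the easiest to sketch, because the feedback is automatic: run the generated code against the generated tests and use the pass rate as a reward. The snippet below is a toy illustration under stated assumptions. The hard-coded candidate strings stand in for code an LLM would generate, the `tests` list stands in for tests from a second system, and `run_tests` and `solution` are hypothetical names. A real setup would also need a proper sandbox, since `exec` on untrusted code is unsafe.

```python
def run_tests(candidate_source, tests, func_name="solution"):
    """Return the fraction of (args, expected) test cases the candidate passes."""
    namespace = {}
    try:
        exec(candidate_source, namespace)  # NOTE: unsafe outside a sandbox
        func = namespace[func_name]
    except Exception:
        return 0.0  # syntax error or missing function: no reward
    passed = 0
    for args, expected in tests:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash on this test counts as a failure
    return passed / len(tests)


# Stand-ins for model-generated candidates (hypothetical examples).
candidates = [
    "def solution(a, b):\n    return a - b",  # wrong logic
    "def solution(a, b):\n    return a + b",  # correct
    "def solution(a, b) return a + b",        # syntax error
]
# Stand-in for tests generated by a second system: add two numbers.
tests = [((1, 2), 3), ((0, 0), 0), ((-1, 1), 0)]

rewards = [run_tests(c, tests) for c in candidates]
best = candidates[rewards.index(max(rewards))]
print(rewards)
```

The rewards could then be used as a training signal, for example by fine-tuning on the best candidates, so that over time the model produces more code that passes. Note that this only works as well as the tests do: if the tests are wrong, the model learns to satisfy wrong tests.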

More speculatively, perhaps it could try to evaluate its own output in a variety of different ways and learn from it:

When it generates output on its own, could it then go and check Wikipedia or the internet to see how accurate it seems?

Could it rewrite its output in a structured logical form and then try to evaluate that logic?

What are some other possible ways it could evaluate itself and improve?